Speakers and Abstracts

Customizable User-Integrated Energy Management in Smart Buildings

Dr. Florian Allerding, Karlsruhe Institute of Technology (KIT), Germany. Changes in energy production and consumption pose new challenges for the design and control of the energy system. In particular, the intermittent nature of power generation from renewables calls for significantly increased load flexibility to support local balancing of energy demand and supply. This talk focuses on a flexible, generic energy management system for Smart Buildings in real-world applications, which is already in use in households and office buildings. Interactive integration of the Smart Building’s residents is essential to keep comfort losses to a minimum. To this end, we present a generic Energy Management Panel that connects the resident to the Energy Management System, and we describe its integration into the Energy Management System of a real Smart Building.

Early risk assessment for Human Factors analysis of Advanced Driving Assistance Systems

Dr. Xavier Chalandon, Renault, France. Early assessment of Advanced Driver Assistance Systems (ADAS) requires questioning and strengthening the safety of the intended functions to be developed. Drawing inspiration from Human Factors cockpit certification methods in aeronautics, we will propose a systemic and systematic way to search for potential hazards associated with the intended driving behavior.

The search is conducted systematically by a team of specialists from different backgrounds, to minimize the probability that essential factors are overlooked. It is supported by matrices of events and guide words. The output should be a set of DVE (Driver-Vehicle-Environment) conditions requiring further investigation during the advanced engineering and development phases.

Brain-spinal interfaces to augment locomotor ability after injury

Dr. Jack DiGiovanna, Ecole Polytechnique Fédérale de Lausanne (EPFL), Switzerland. Brain-machine interfaces create artificial bridges that connect a person's brain to external devices; these prostheses can partially replace lost functions. Brain-spinal interfaces follow a different philosophy: they aim to restore functions by exploiting the existing spinal circuitry. In this talk, I will illustrate the multiple facets of such interfaces. Specifically, I will show examples of brain states that correspond to locomotion, and of spinal stimulation strategies that produce complex locomotion.

Automated vehicles: where are they heading?

Dr. Ebru Dogan, Institut VeDeCom, Versailles, France. Vehicle automation is one of the leading items on the agenda of transport researchers, car manufacturers, and policy makers. One criticism that the human factors community directs at system designers is that the role of the human driver is not clearly defined during the design process. There is rising concern that increasing levels of automation introduce additional complexity into the driving task while impairing the driver’s situation awareness. This talk will address issues related to levels of automation and driver situation awareness. The aim is to stir a discussion about the evolution of the role of the human driver in an increasingly automated vehicle.

Control of functional electrical stimulation for upper-limb stroke rehabilitation

Prof. Timothy A. Exell, Cardiff Metropolitan University, UK, and Chris T. Freeman, Katie L. Meadmore, Ann-Marie Hughes, Emma Hallewell, Eric Rogers, and Jane H. Burridge. Stroke is a leading cause of upper-limb disability worldwide. Functional electrical stimulation (FES) has been used effectively in stroke rehabilitation to help patients move their impaired limbs. The benefits of FES are greatest when it is combined with maximal voluntary effort from the patient to perform the movement. The control challenge is therefore to provide enough FES to assist the movement while still encouraging maximal voluntary effort. Work at the University of Southampton over the past decade has developed an upper-limb rehabilitation system that combines Iterative Learning Control (ILC) and FES with robotic support. This work comprised three main research programs, starting with planar reaching, progressing to 3D virtual reality reaching and, most recently, functional reach and grasp tasks. The rehabilitation system incorporates a simplified dynamic model of the arm-support system. A proportional-integral-derivative (PID) controller is currently employed in parallel with phase-lead ILC, using joint angle reference signals modeled from unimpaired movement. ILC is ideally suited to controlling the FES signals for each muscle group because the rehabilitation tasks are performed repeatedly. The ILC uses the performance error from each trial to update the FES control parameters for the following trial, in an attempt to reduce the error. This approach also reduces the stimulation after successful performance, increasing the effectiveness of rehabilitation by requiring maximal patient effort.
Each of the three research programs has resulted in clinical trials, with significant improvements seen in performance accuracy and clinical assessments of arm movement across all patients.
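The trial-to-trial update described above can be illustrated with a minimal sketch of a phase-lead ILC law, u_next(t) = u(t) + gamma * e(t + 1), where the one-sample lead matches the one-step delay of the plant. The sketch assumes a hypothetical first-order linear plant (parameters `a`, `b`) as a stand-in for the arm and muscle dynamics, which in the real system are nonlinear and are also handled by a PID controller running in parallel. The functions `simulate` and `phase_lead_ilc`, the gain `gamma`, and the voluntary-effort signal `v` are illustrative choices, not the Southampton implementation.

```python
import numpy as np

def simulate(u, v, a=0.3, b=1.0):
    """Hypothetical toy plant: y[t+1] = a*y[t] + b*(u[t] + v[t]), with y[0] = 0.

    u: stimulation per sample; v: hypothetical voluntary contribution (same units).
    Returns y of length len(u) + 1 (y[0] is the fixed initial condition).
    """
    y = np.zeros(len(u) + 1)
    for t in range(len(u)):
        y[t + 1] = a * y[t] + b * (u[t] + v[t])
    return y

def phase_lead_ilc(r, v, gamma=0.8, trials=40):
    """Repeat the task `trials` times, updating u[t] from the error at t + 1."""
    u = np.zeros(len(r) - 1)
    for _ in range(trials):
        e = r - simulate(u, v)      # tracking error of the current trial
        u = u + gamma * e[1:]       # one-sample phase lead: u[t] first affects y[t+1]
    return u, r - simulate(u, v)    # learned stimulation and its final error

# Reference joint trajectory (hypothetical); r[0] = y[0] = 0
r = np.concatenate(([0.0], np.sin(np.linspace(0.0, np.pi, 50))))
u_passive, e_passive = phase_lead_ilc(r, v=np.zeros(50))
u_active, e_active = phase_lead_ilc(r, v=np.full(50, 0.3))
```

In this toy linear setting, adding voluntary effort `v` at the plant input makes the learned stimulation settle at roughly the passive stimulation minus `v`. This mirrors the abstract's point that stimulation drops as the patient contributes more effort.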

Modeling human-machine interaction in the cockpit

Prof. Ronald A. Hess, University of California, Davis, US. The human-machine interaction involved in controlling an aircraft requires the pilot to continuously integrate visual, proprioceptive, and vestibular information. In addition to acting as an active controller, the human pilot is increasingly required to function as a “system manager.” Indeed, recent aircraft accidents, such as the crash of Asiana Airlines Flight 214 at San Francisco International Airport on 6 July 2013, have raised questions about the manner in which the human pilot interacts with modern, complex flight management systems, as well as about the design philosophy of these systems themselves. This talk will briefly review extant control-theoretic models of the human pilot and possible means of extending these models to describe human interaction with complex dynamic systems such as aircraft, in which human anticipatory behavior is not only desirable but absolutely essential for safe flight operations. Emphasis will be placed on the possible role of the pilot’s internal model of the system being controlled or managed in achieving successful anticipatory behavior, as well as on the resulting mental workload that such behavior may produce.

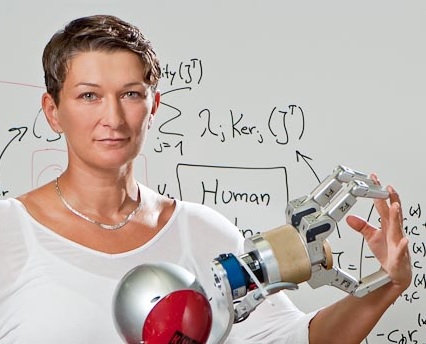

Control in physical human-machine interaction

Prof. Sandra Hirche, Technische Universität München, Germany

Joint System as a guiding approach for driver-automation system design

Johann Kelsch, German Aerospace Center (DLR). Developing highly automated road transport systems is becoming more and more complex. Due to the rising number of ‘differently intelligent’ assistance functions inside and outside the upcoming highly automated vehicles, the usability of these systems becomes a crucial challenge. The established Joint System approach draws on knowledge from Human Factors research, treating human-related problems as problems within a Joint System, and develops this knowledge further toward new concepts for system solutions. The Joint System approach can reduce system complexity, raising usability and reducing costs and development times. This presentation describes work completed and planned in several EU projects as components of a Joint Driver-Automation System. Several examples will be presented concerning the systematic exploration and design of the observability and controllability of such a system. They cover different HMI concepts and strategies, such as ‘safety shield’, ambient display, driver decoupling, automation levels, and cooperation modes. For some examples, experimental data will be presented and discussed.

What Does the Surgeon Really Need?

Prof. Thomas Lendvay, Seattle Robotic Surgery Program (Co-Director), and University of Washington, Center for Clinical and Translational Research. Medical error rates remain unacceptably high, with medical errors ranking among the top 10 to 15 causes of death. Surgery accounts for a disproportionate share of these errors. While clinicians have worked hard to address this, existing techniques focused on human improvement are limited at best. Meanwhile, surgical robotics has transformed modern surgery over the last decade and has thus opened it up to new solutions from fields such as cyber-physical systems. This promises to usher in a new era in surgery that may finally solve long-standing problems. Yet medical robotics is in its infancy, and most surgical robotic technology merely aims to restore capabilities that were present in non-robotic surgery, such as haptics. In this talk, Dr. Lendvay will highlight some of the “grand challenges” of surgery and provide concrete examples from the operating room. The talk will include topics such as detection of and warnings about imminent adverse events, automated avoidance of human error, super-human sensing and diagnostics, and the potential role of surgeon-robot shared control. These topics map to traditional areas of focus for the CPS community but may also motivate new ones. This presentation will not provide answers. Instead, it aims to generate discussion about directing engineering efforts toward more than incremental improvements in medical robotics and healthcare.

HemiGaitEm: an embedded system for gait parameter acquisition in hemiplegic persons

Tong Li, Gilbert Pradel, Nicolas Roche, ENS Cachan-Garches Hospital, France. Cerebrovascular accidents (CVAs, or strokes) are the leading cause of adult disability and the second leading cause of dementia, in both high-income and developing countries. In France, 130,000 people suffer a stroke every year; worldwide, 15 million people are affected by stroke. Two-thirds of stroke victims are left with hemiplegia, and these patients have very serious locomotion problems that limit their autonomy in daily life. To obtain complete pathology information for every patient and to improve treatment (physiotherapy, toxin injection, etc.), it is increasingly important to acquire gait parameters both in hospital settings, through quantified gait analysis experiments, and by storing data during everyday walking. Physicians also need feedback on the effects of the treatment applied to patients in order to modify and/or improve it. The proposed system uses seven inertial measurement units placed on the lower limbs to calculate the characteristic angles of the various segments. Its results have been compared with reference variables generated by our Vicon reference equipment, and the low error found between the reference data and the embedded system's data supports its validation. The system is intended to be worn by the person to store and analyze gait parameters during everyday walking.
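As a rough illustration of how a joint angle can be derived from two inertial sensors on adjacent segments, the sketch below integrates the difference between two gyroscope signals about a known joint axis. The abstract does not describe HemiGaitEm's algorithm. This sketch assumes the gyroscope data are already expressed in a common, joint-aligned frame (that is, sensor-to-segment calibration is done) and ignores gyroscope bias, so on real data it would drift and need fusion with accelerometer information. `joint_angle_from_gyros` and the synthetic signals are hypothetical.

```python
import numpy as np

def joint_angle_from_gyros(omega_proximal, omega_distal, axis, dt, angle0=0.0):
    """Estimate a hinge-joint flexion angle by integrating the relative angular rate.

    omega_proximal, omega_distal: (N, 3) gyroscope rates in rad/s, ASSUMED to be
    expressed in one common frame already. axis: joint flexion axis in that frame.
    Integration uses the trapezoidal rule; gyroscope bias and drift are ignored.
    """
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    rel_rate = (np.asarray(omega_distal) - np.asarray(omega_proximal)) @ axis
    increments = 0.5 * (rel_rate[1:] + rel_rate[:-1]) * dt
    return angle0 + np.concatenate(([0.0], np.cumsum(increments)))

# Synthetic check: one gait-like knee flexion cycle, q(t) = 0.5 * (1 - cos(2*pi*t)) rad
dt = 1e-3
t = np.arange(0.0, 1.0 + dt / 2, dt)
q_true = 0.5 * (1.0 - np.cos(2 * np.pi * t))
q_dot = np.pi * np.sin(2 * np.pi * t)
axis = np.array([0.0, 1.0, 0.0])
omega_thigh = np.column_stack([0.2 * np.ones_like(t), 0.5 * np.sin(t), np.zeros_like(t)])
omega_shank = omega_thigh + q_dot[:, None] * axis   # hinge: rates differ only along the axis
q_est = joint_angle_from_gyros(omega_thigh, omega_shank, axis, dt)
```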

Advanced driving assistance systems for elderly/disabled drivers

Dr. Claude Marin-Lamellet, IFSTTAR, France, and Dr. Thierry Bellet, IFSTTAR, France. Advanced Driver Assistance Systems (ADAS) are designed to assist drivers by taking over more and more driving subtasks, such as longitudinal and lateral control and emergency braking. Sensors can detect probable dangers in advance and either warn the driver or act directly to avoid accidents. Adaptive driving assistance systems can be an option to prolong active driving among older or disabled people. However, they should be adapted to the users’ needs, be well accepted, and be easily understood by drivers, especially given the characteristics of older drivers. The challenge for system manufacturers is to design reliable Driver Monitoring Functions to support future “adaptive” technologies tailored to older drivers. Such technologies should be able to adjust their assistance to the current driving context and to the specific driver’s characteristics, state, difficulties, and need for help at that particular time. These “Driver Monitoring Functions” could identify older drivers' difficulties or risky behaviours and adapt the driving assistance accordingly. Within the SAFEMOVE project, a literature review was conducted to identify the main specific needs of older drivers and to examine existing studies of the potential value of driving assistance systems for seniors; the main outcomes of this review will be presented. Moreover, based on empirical data collected from a sample of 76 older drivers, a Human Centred Design approach to monitoring functions was implemented in order to (1) supervise older drivers' behaviours, (2) detect driving errors or critical situations, and (3) support real-time adaptation of driving aids and Human-Machine Interaction modalities in accordance with the specific difficulties and needs of this group of drivers.

Hierarchical control of driving and human-machine cooperation

Dr. Franck Mars, IRCCyN, CNRS & Ecole Centrale de Nantes, France. This talk will present a theoretical framework describing how humans process information and control their behavior hierarchically, from sensorimotor control to strategic planning, with implicit and explicit procedural control in between. This general framework will then be applied to vehicle control, where it will serve as a guideline for discussing various types of driving assistance systems in terms of human-machine cooperation.

Prof. Berenice Mettler, Univ. of Minnesota, US. Over most of the last century, the roles of operators in human-machine systems were well delineated. Function allocation was dictated by a set of specific technological capabilities and mathematical modeling tools. Dramatic advances in computational techniques, sensors, and actuators broaden functional capabilities into domains such as perception and cognition. These advances blur the delineation between human and system and confront researchers with less understood human functions. The paper will first review the historical trend in human-machine systems and discuss the key developments in theories of human modeling, their limitations, and the new challenges, using an information processing model. The second part of the paper will describe a modeling framework that emphasizes operator skills in two representative tasks: spatial guidance and surgery. The paper approaches skill not in the classic way, i.e., as a reference for human capabilities, but as a means to determine what motivates humans to invest in acquiring and exercising these skills. The goal is systems that can accommodate a broad range of skills and stimulate the development of skills within the human-machine system.

Software for Robot Teleoperation Applications Based on ROS

Dr. Alex F. Neves, Fernando Lizarralde, Ramon R. Costa, COPPE/Federal University of Rio de Janeiro, Brazil. This talk presents RobotGUI, a robot teleoperation software package that is being developed on top of ROS (Robot Operating System). RobotGUI can load libraries (Robot Packages), which group Components and Tools. Components are similar to ROS nodes: they represent the robot's functionalities, interact with hardware, and can communicate with other Components. Tools are windows in the Graphical User Interface (GUI) that can connect to Components, allowing the robot's data to be visualized. All ROS tools can still be used during robot development. The GUI is better suited to end users, since it does not require knowing which ROS topics should be used to control the robot or view its data. Generic Tools and Components can be defined in a generic Robot Package so that many robots can use them, maximizing code reuse. Examples of Tools include the Data Table, Device Management, and Camera Viewer. The ROS framework is also addressed, since it is a key element of the development. Related R&D work is presented to give some background on teleoperation. As an example of difficult teleoperation, we describe DIANE, a robot for Explosive Ordnance Disposal (EOD). Some enabling technologies that make robots more suitable for teleoperation are considered, including SLAM (Simultaneous Localization and Mapping), collision avoidance techniques, and 3D virtual representation of the environment. RobotGUI is being developed on two real robots, represented by three Robot Packages (one for each robot plus a generic package), which are also presented to illustrate the software.
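The Package/Component/Tool organization described above can be sketched in plain Python. This is a hypothetical model, not RobotGUI's code and not a ROS API. `Component`, `Tool`, `RobotPackage`, `load_packages`, the `arm` component, and the `joint_1` value are illustrative names, and a simple callback list stands in for ROS topics. The sketch shows how a Tool from a generic package can be attached to a robot-specific Component by name, without the user handling topic names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Component:
    """Hypothetical stand-in for a RobotGUI Component (plays the role of a ROS node)."""
    name: str
    data: Dict[str, float] = field(default_factory=dict)
    listeners: List[Callable[[Dict[str, float]], None]] = field(default_factory=list)

    def publish(self, **values: float) -> None:
        """Update this Component's data and push a copy to every connected Tool."""
        self.data.update(values)
        for listener in self.listeners:
            listener(dict(self.data))

@dataclass
class Tool:
    """Hypothetical GUI window that displays data from the Components it is connected to."""
    title: str
    shown: Dict[str, float] = field(default_factory=dict)

    def connect(self, component: Component) -> None:
        # The user picks a Component by name; no topic names are involved.
        component.listeners.append(self.shown.update)

@dataclass
class RobotPackage:
    """Hypothetical library bundling the Components and Tools for one robot."""
    name: str
    components: Dict[str, Component] = field(default_factory=dict)
    tools: Dict[str, Tool] = field(default_factory=dict)

def load_packages(*packages: RobotPackage) -> RobotPackage:
    """Combine a generic package with robot-specific ones; later entries win on name clashes."""
    merged = RobotPackage(name="+".join(p.name for p in packages))
    for package in packages:
        merged.components.update(package.components)
        merged.tools.update(package.tools)
    return merged

generic = RobotPackage("generic", tools={"Data Table": Tool("Data Table")})
diane = RobotPackage("DIANE", components={"arm": Component("arm")})
robot = load_packages(generic, diane)
robot.tools["Data Table"].connect(robot.components["arm"])
robot.components["arm"].publish(joint_1=0.5)
```

Keeping generic Tools in their own package is what allows them to be reused across robots in this sketch. A second robot package would be merged with the same `generic` package.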

Thinking of users in smarter energy systems

Prof. Ritsuko Ozaki, Imperial College, London, UK. This talk discusses what happens after a new technology is adopted and how it is used in users’ everyday contexts. We look at technologies in general, rather than cyber systems specifically, using examples from empirical studies conducted in the UK. In this way, we consider how users respond to adopted and implemented technologies and incorporate them into their daily practices. Do humans ever use technologies as designers expected? What can engineers and designers learn from people’s experiences, and how?

Firing-rate models: A low-hanging fruit for the analysis of basal ganglia oscillations

William Pasillas-Lepine, L2S CNRS, Supelec, Université Paris Sud, France

Human-Machine Interaction in Space

Prof. Stephen Robinson, University of California, Davis, US. The study of the human-machine interaction involved in controlling human-crewed spacecraft, space robotics, and spacesuits for extravehicular activity (EVA, i.e., spacewalks) is in its infancy. There is a rich variety of potential improvements in information flow and failure response as humans extend both their experience and their reach beyond Earth. This talk will examine the U.S. Space Shuttle, the International Space Station (ISS), and both Russian and U.S. spacesuits as examples of the so-far rudimentary approach to human systems integration that has been applied to human space exploration. Manual control of the Shuttle and ISS robotic arms will be examined, as will the U.S. Extravehicular Mobility Unit (EMU). Particular emphasis will be placed on failure recognition and response for space vehicle electro/fluid/mechanical/sensor systems. Vehicle guidance and control, along with display design, will also be considered, using space rendezvous and docking as an example.

Measuring, Inducing, and Controlling Human Motions in a Rehabilitation Context

Thomas Seel, TU Berlin, Germany, and Thomas Schauer and Joerg Raisch, TU Berlin. Due to the increasing incidence of stroke and neurological disorders, a growing population of patients suffers from lesions that result in motor deficits of the upper or lower limbs. In neuroprostheses, functional electrical stimulation (FES) is used to activate and support paretic muscles. However, to generate functional motions, the stimulation must be adapted based on measurements of joint angles or similar motion parameters. In this context, both the real-time measurement and the control of FES-induced motion are major challenges. This talk highlights the potential of inertial sensors for real-time gait analysis, with a focus on methods that allow automatic sensor-to-segment calibration from arbitrary motions by exploiting kinematic constraints. Furthermore, the limitations of FES-driven motion generation are outlined, and learning control methods are presented that have proved capable of providing effective, user-adaptive motion support. Finally, an intelligent gait neuroprosthesis is envisioned that combines these two key technologies. Experimental results and a live demonstration of key features illustrate the effectiveness of this approach.

An autonomous wheel-based stair-climbing principle and its potential for wheelchairs and assistive robots

Bruno Strah, TU Darmstadt, Germany, and Stephan Rinderknecht, TU Darmstadt, Germany. A short overview and comparison of existing locomotion systems are given. This motivates the development of a new wheel-based principle, called the Stair Climbing Device (SCD), which is capable of autonomous stair climbing. It can be regarded as a double inverted pendulum. In normal operation, the system moves with either two or four wheels in ground contact, depending on the situation. The presented hybrid dynamic model covers the continuous-time dynamics as well as the transitions between the discrete states that occur during stair climbing. The control design covers the states with two and four wheels in ground contact as well as the state transitions. The control algorithms were designed using feedback linearization methods. Results achieved on a real prototype are presented as a motion sequence that illustrates stair climbing. The advantages of this principle are illustrated, challenges for further research are identified, and possible applications of the principle are described.
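Feedback linearization, the control design method named above, can be illustrated on a much simpler system than the SCD: a single inverted pendulum with torque input. The sketch cancels the gravity nonlinearity so that the closed loop becomes the linear system theta'' = -k1*theta - k2*theta'. The parameters (`G`, `L`, `M`) and gains (`k1`, `k2`) are hypothetical. The SCD's double-pendulum dynamics and its discrete two-wheel/four-wheel contact states are not modelled here.

```python
import math

G, L, M = 9.81, 0.5, 2.0   # hypothetical gravity (m/s^2), pendulum length (m), mass (kg)

def dynamics(theta, omega, torque):
    """Point-mass inverted pendulum; theta = 0 is upright and gravity destabilizes it."""
    alpha = (G / L) * math.sin(theta) + torque / (M * L * L)
    return omega, alpha

def feedback_linearizing_torque(theta, omega, k1=25.0, k2=10.0):
    # Desired linear closed loop: theta'' = v = -k1*theta - k2*theta' (poles at s = -5, -5)
    v = -k1 * theta - k2 * omega
    # Cancel the gravity term so that the resulting angular acceleration equals v
    return M * L * L * (v - (G / L) * math.sin(theta))

def simulate(theta0=0.6, omega0=0.0, dt=1e-3, t_end=5.0):
    """Explicit Euler simulation of the closed loop; returns the final (theta, omega)."""
    theta, omega = theta0, omega0
    for _ in range(int(t_end / dt)):
        torque = feedback_linearizing_torque(theta, omega)
        dtheta, domega = dynamics(theta, omega, torque)
        theta += dt * dtheta
        omega += dt * domega
    return theta, omega

theta_end, omega_end = simulate()
```

The exact cancellation relies on a perfect model. On a real prototype, model mismatch leaves residual nonlinear terms that the linear gains must tolerate.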

Enhancing situation awareness in process control

Dr. Anand Tharanathan, Accenture, Chicago, US. Situation awareness is critical during complex operations. Process control demands varying levels of cognitive effort; hence, it is critical to design systems that help operators maintain enhanced levels of situation awareness. This presentation will introduce the concept of situation awareness and place it in the context of process control and operations. In addition, a study will be presented that demonstrates how cognitive engineering principles can help design advanced visualizations and decision support systems, and thereby enhance operators' situation awareness during process control. Implications for further research will also be presented.

Risk analysis based on the concept of dissonance to identify gaps between the design and usage of automated systems

Prof. Frédéric Vanderhaegen, LAMIH, Université de Valenciennes, France. The use of information systems such as on-board automated systems for cars sometimes presents operational risks that classical risk analysis methods do not take into account. Gaps can occur between the knowledge of different users, or between the knowledge of users and that of the designers of a given system, and adapted methods are required to assess the risks these gaps create. Dissonance engineering is seen as a possible way to analyze risks related to conflicts between knowledge (Vanderhaegen, 2013, 2014). A cognitive dissonance is defined as an incoherence between individual cognitions or knowledge (Festinger, 1957). From the point of view of cindynics, a dissonance is a collective or organizational conflict related to incoherent knowledge between persons or between groups of people (Kervern, 1995). A dissonance may affect different human factors such as behaviors, attitudes, ideas, beliefs, viewpoints, competences, etc. It occurs when something was, is, may be, or will be wrong. It may produce discomfort induced by the detection, treatment, or production of the dissonance, and it may be managed individually or collectively. A dissonance can also arise from several sources: important or difficult decisions involving the evaluation of several possible alternatives, divergent viewpoints on human behaviors, failed competitive or cooperative activities, or organizational changes that produce incompatible information. Knowledge then has to be updated or refined based on new feedback from the field, but this can itself generate dissonances. The talk will detail an original approach to analyzing risks associated with dissonances.
A taxonomy of dissonances will be proposed, and a possible implementation of a shared human-machine knowledge-based system will be presented to treat two kinds of dissonance: erroneous affordances and contradictory knowledge. Several practical examples from industrial processes and transport domains will be presented and discussed to illustrate the value of this approach for identifying hazardous gaps between the design and the usage of a system. (References: Festinger, L. (1957). A Theory of Cognitive Dissonance. Stanford, CA: Stanford University Press.; Kervern, G.-Y. (1995). Éléments fondamentaux des cindyniques (Fundamental elements of cindynics). Paris: Economica.; Vanderhaegen, F. (2013). A dissonance management model for risk analysis. 12th IFAC/IFIP/IFORS/IEA Symposium on Analysis, Design, and Evaluation of Human-Machine Systems, Las Vegas, Nevada, USA, August, pp. 381-401.; Vanderhaegen, F. (2014). Dissonance engineering: A new challenge to analyse risky knowledge when using a system. International Journal of Computers, Communications and Control, 9(6), pp. 670-679.)

Biomechanical Human Models: Towards the virtual conception and assessment of mobility

Philippe Vezin, Université de Lyon, Université Claude Bernard Lyon 1 and IFSTTAR, Bron, France. Digital human models are used at different levels in the design of vehicles (cars, buses, coaches, etc.) and in the study of personal mobility (e.g., pedestrians), either for ergonomics, accessibility, and comfort design or for safety assessment. However, a single Virtual Man able to correctly assess comfort, accessibility, and safety does not yet exist, and probably never will. These two aspects of vehicle design (ergonomics and safety) face different scientific issues and require different modelling approaches. For instance, biomechanical human body models for injury prediction based on the Finite Element (FE) method have the potential to represent human variability. They can also provide information that complements what can be predicted with dummies or multi-body human models. Dummies and multi-body human models are simplified human representations that can mostly predict a global kinematic response and global injury criteria. Because FE models include descriptions of anatomical components with their material properties, they are potentially capable of predicting complex deformation patterns under loading. These include strains and stresses in tissues that can be correlated with injury risks to specific structures. These advanced models have been continuously improved over the past 10 years. Early models aimed at predicting occupant kinematics during car crashes as well as some bone fractures. On the other hand, ergonomic evaluations of a product based on digital mock-ups together with digital human models (DHM) are increasingly used in the early phases of product design to reduce development time and cost.
To help the designer evaluate the future product, the digital human should ideally behave like a real human being, not only in terms of anthropometry but also in terms of motion, discomfort perception, and work-related tissue injury. This requires deep knowledge of human functional capacities and realistic modelling of the musculoskeletal system. These models are usually based on rigid multi-body approaches with only a few specific deformable parts. Creating a human model that corresponds to one specific case is a long and expensive process if the model must be built from scratch while meeting quality standards. In practice, digital biomechanical models usually represent an average population or certain categories of people. When creating a model, it is important to respect the anatomical reality of the represented person. However, existing numerical models are often based on a statistical mean (e.g., the 50th percentile). Nevertheless, a person-specific predictive tool can be obtained from these mean models using geometric deformation methods, which require medical-imaging acquisitions of the person. True personalization of the models should not be only geometric: the material properties must also be adapted as closely as possible to the target subject. For routine use, it is essential to develop simple, sufficiently precise, in vivo, non-invasive methods for measuring and determining material properties. Finally, factors such as muscle tone and the mechanical interactions between organs must all be taken into account to achieve realistic, biofidelic person-specific models. To achieve the virtual conception and assessment of mobility with biomechanical human models, one last great challenge remains: merging the different approaches to human modelling.
This will probably be achieved by developing a framework that connects the different human models, including behavioral (cognitive) models, and allows them to interact.
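One common family of geometric deformation methods morphs a mean model onto a subject by interpolating the displacements of corresponding landmarks, for example with radial basis functions or kriging. The abstract does not say which method is used. The sketch below assumes radial basis function interpolation with kernel phi(r) = r plus an affine term, in the spirit of kriging with a linear drift. `rbf_morph` and the synthetic landmarks are hypothetical. The landmarks are matched exactly. If the subject's landmarks are an affine transform of the mean model's, every mesh node is mapped by that same affine transform.

```python
import numpy as np

def rbf_morph(src_ctrl, dst_ctrl, nodes):
    """Morph mesh nodes so that the src_ctrl landmarks land exactly on dst_ctrl.

    RBF interpolation of landmark displacements with kernel phi(r) = r plus an
    affine part [1, x, y, z]. src_ctrl, dst_ctrl: (n, 3); nodes: (m, 3).
    Landmarks must be distinct and not all coplanar for the system to be solvable.
    """
    src_ctrl = np.asarray(src_ctrl, dtype=float)
    disp = np.asarray(dst_ctrl, dtype=float) - src_ctrl        # landmark displacements
    n = len(src_ctrl)
    K = np.linalg.norm(src_ctrl[:, None, :] - src_ctrl[None, :, :], axis=-1)
    P = np.hstack([np.ones((n, 1)), src_ctrl])                 # affine basis
    A = np.zeros((n + 4, n + 4))
    A[:n, :n] = K
    A[:n, n:] = P
    A[n:, :n] = P.T
    rhs = np.vstack([disp, np.zeros((4, 3))])
    coeffs = np.linalg.solve(A, rhs)
    w, a = coeffs[:n], coeffs[n:]                              # RBF weights, affine coefficients
    nodes = np.asarray(nodes, dtype=float)
    Kn = np.linalg.norm(nodes[:, None, :] - src_ctrl[None, :, :], axis=-1)
    Pn = np.hstack([np.ones((len(nodes), 1)), nodes])
    return nodes + Kn @ w + Pn @ a

rng = np.random.default_rng(0)
src = rng.normal(size=(12, 3))                   # hypothetical landmarks on the mean model
A_true = np.array([[1.10, 0.05, 0.00], [0.00, 0.95, 0.02], [0.03, 0.00, 1.20]])
t_true = np.array([0.10, -0.20, 0.30])
dst = src @ A_true.T + t_true                    # hypothetical subject landmarks (affine case)
nodes = rng.normal(size=(40, 3))                 # other nodes of the mean-model mesh
morphed = rbf_morph(src, dst, nodes)
```

The affine term lets global scaling (e.g., stature) pass through undistorted, while the radial part absorbs local, subject-specific shape differences between landmarks.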
